Reading and Writing AWS S3 Buckets With Python

Watch Now: This tutorial has a related video course created by the Real Python team. Watch it together with the written tutorial to deepen your understanding: Python, Boto3, and AWS S3: Demystified

Amazon Web Services (AWS) has become a leader in cloud computing. One of its core components is S3, the object storage service offered by AWS. With its impressive availability and durability, it has become the standard way to store videos, images, and data. You can combine S3 with other services to build infinitely scalable applications.

Boto3 is the name of the Python SDK for AWS. It allows you to directly create, update, and delete AWS resources from your Python scripts.

If you've had some AWS exposure before, have your own AWS account, and want to take your skills to the next level by starting to use AWS services from within your Python code, then keep reading.

By the end of this tutorial, you'll:

- Be confident working with buckets and objects directly from your Python scripts

- Know how to avoid common pitfalls when using Boto3 and S3

- Understand how to set up your data from the start to avoid performance issues later

- Learn how to configure your objects to take advantage of S3's best features

Before exploring Boto3's characteristics, you will first see how to configure the SDK on your machine. This step will set you up for the rest of the tutorial.

Installation

To install Boto3 on your computer, go to your terminal and run pip install boto3.

You've got the SDK. But you won't be able to use it right now, because it doesn't know which AWS account it should connect to.

To make it run against your AWS account, you'll need to provide some valid credentials. If you already have an IAM user that has full permissions to S3, you can use that user's credentials (their access key and their secret access key) without needing to create a new user. Otherwise, the easiest way to do this is to create a new AWS user and then store the new credentials.

To create a new user, go to your AWS account, then go to Services and select IAM. Then choose Users and click on Add user.

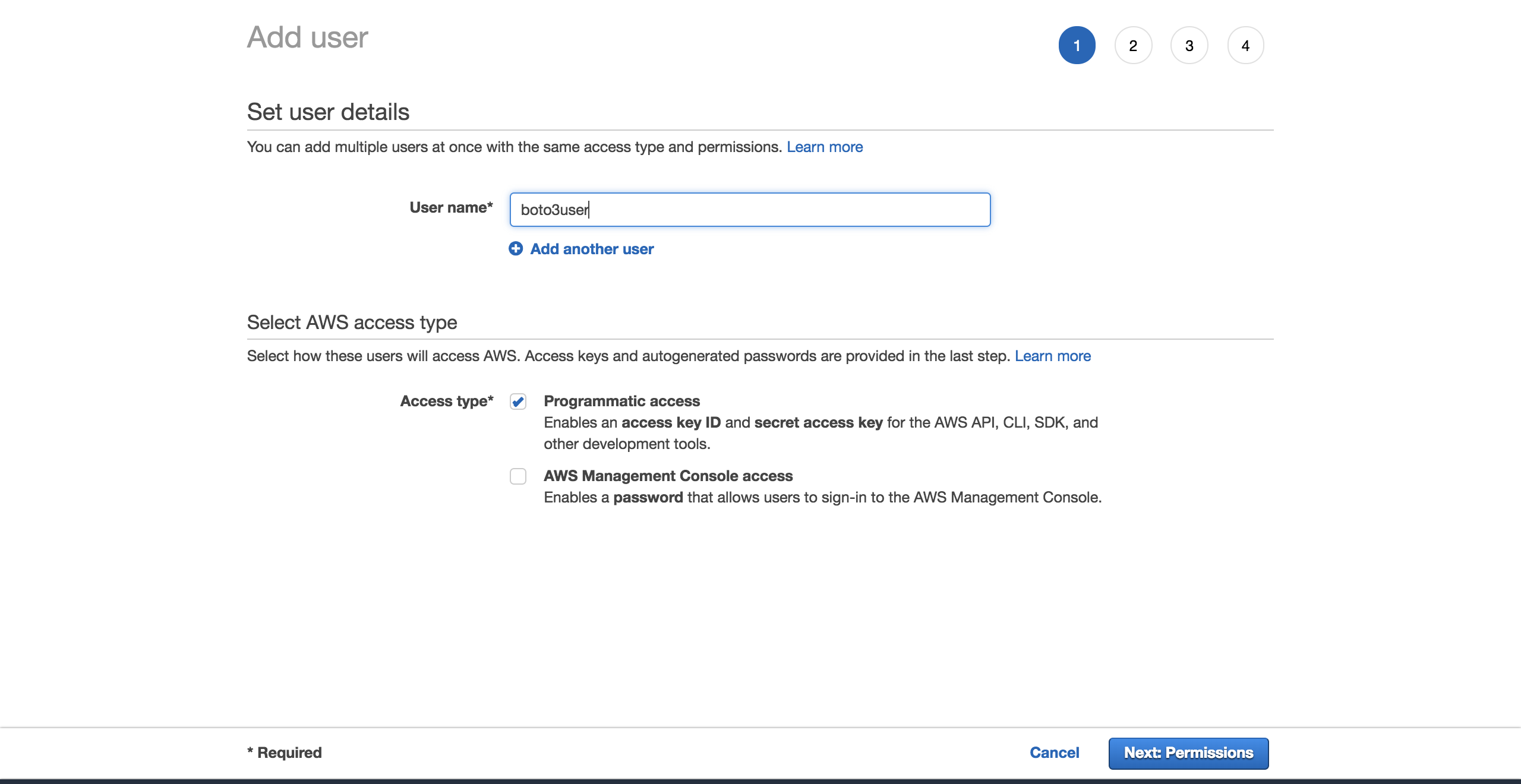

Give the user a name (for example, boto3user). Enable programmatic access. This will ensure that this user will be able to work with any AWS-supported SDK or make separate API calls:

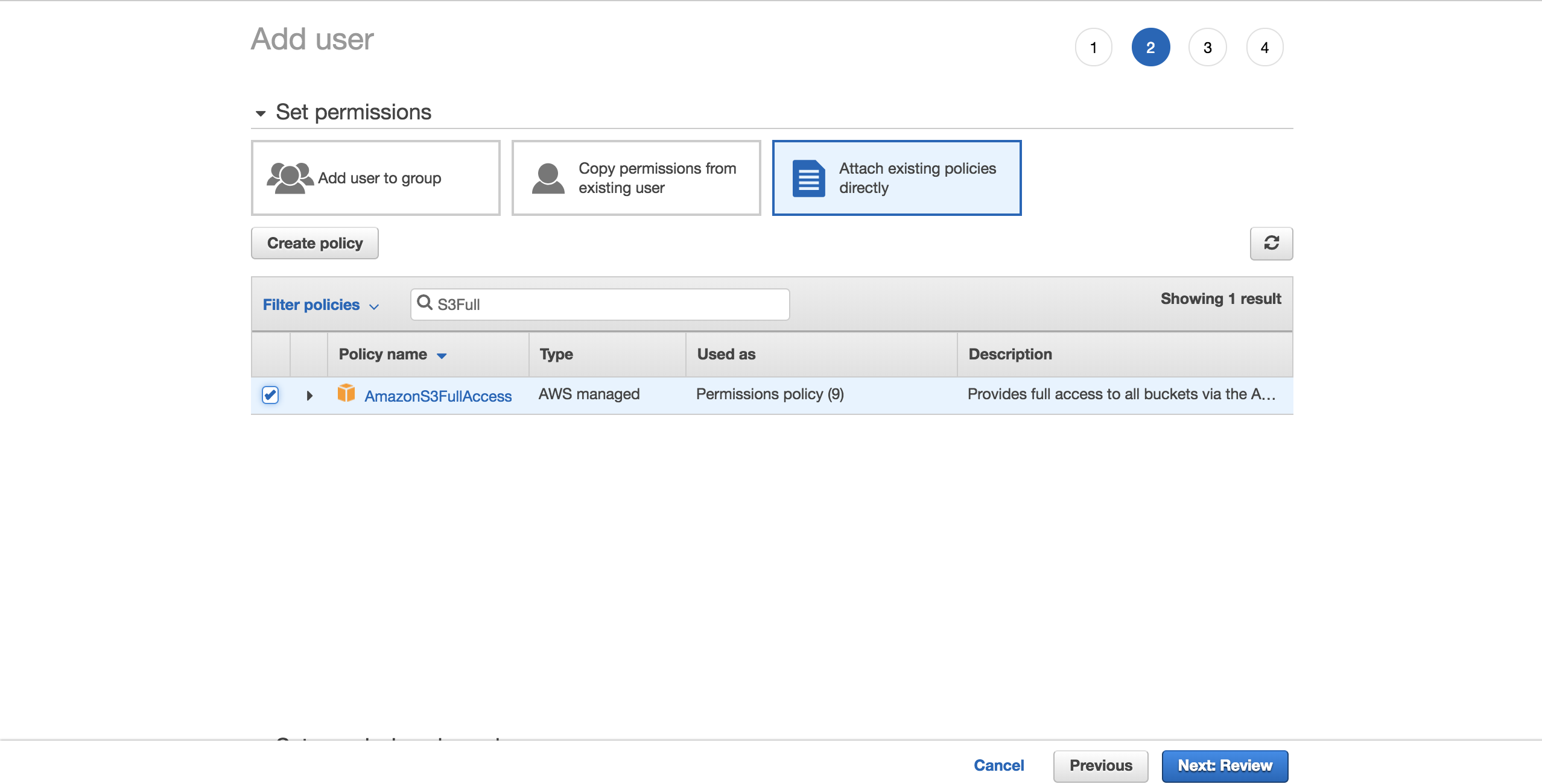

To keep things simple, choose the preconfigured AmazonS3FullAccess policy. With this policy, the new user will be able to have full control over S3. Click on Next: Review:

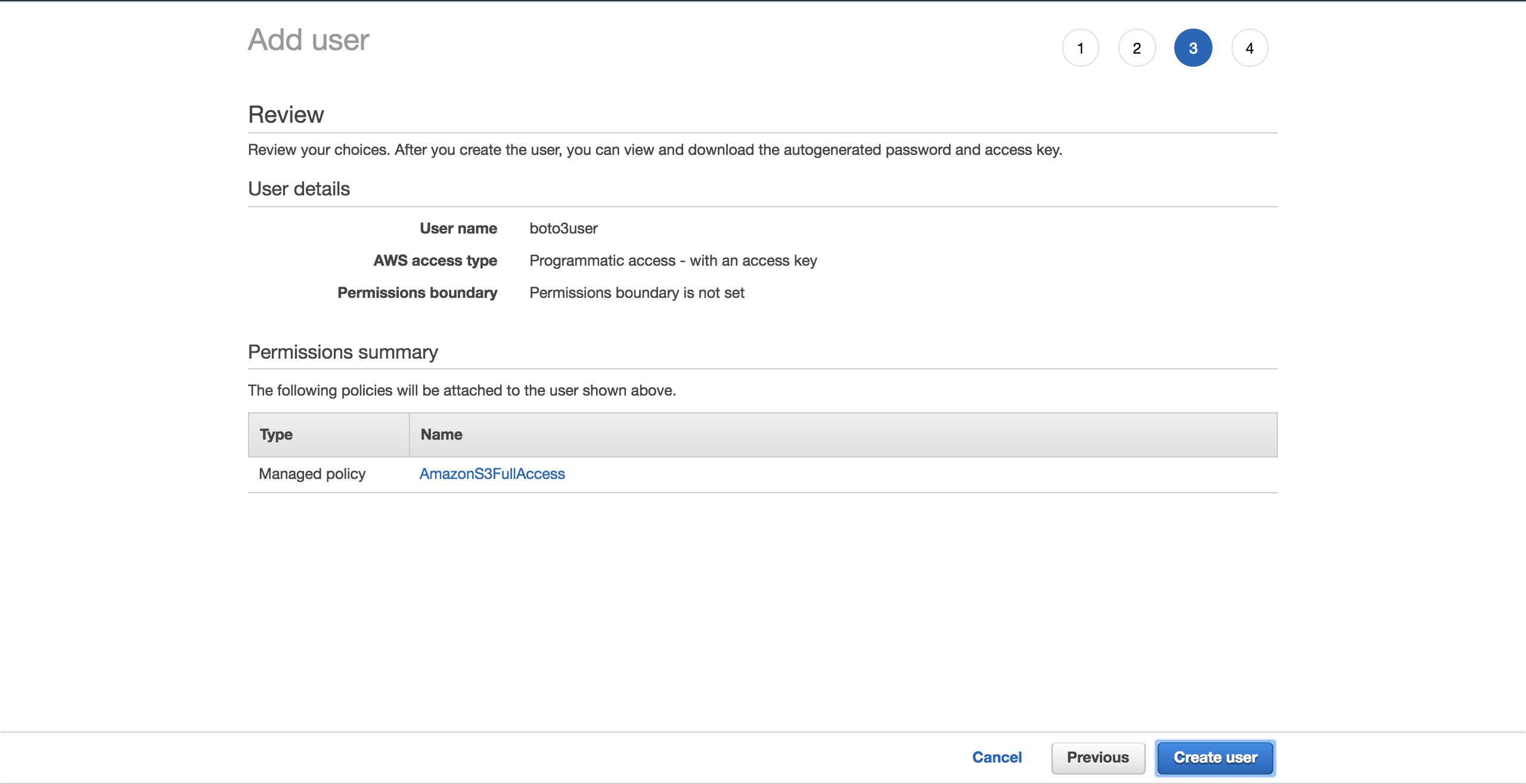

Select Create user:

A new screen will show you the user's generated credentials. Click on the Download .csv button to make a copy of the credentials. You will need them to complete your setup.

Now that you have your new user, create a new file, ~/.aws/credentials:

```console
$ touch ~/.aws/credentials
```

Open the file and paste the structure below. Fill in the placeholders with the new user credentials you have downloaded:

```ini
[default]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
```

Save the file.

Now that you have set up these credentials, you have a default profile, which will be used by Boto3 to interact with your AWS account.
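If you want to confirm that the file has the shape described above before going further, a quick check with the standard library's configparser can help. This is only a sketch: missing_credential_keys() is a made-up helper name, and it verifies that the two keys are present in the profile, not that AWS will accept them.

```python
import configparser

REQUIRED_KEYS = ('aws_access_key_id', 'aws_secret_access_key')


def missing_credential_keys(credentials_text, profile='default'):
    """Return the required keys that are absent from the given profile."""
    # interpolation=None keeps '%' characters in a secret from being
    # treated as configparser interpolation syntax.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(credentials_text)
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS
            if not parser.has_option(profile, key)]


# Placeholder content with the same structure as ~/.aws/credentials.
sample = """
[default]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
"""

print(missing_credential_keys(sample))  # prints []
```

To check your real file, you could pass in the text returned by pathlib.Path('~/.aws/credentials').expanduser().read_text().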

There is one more configuration to set up: the default region that Boto3 should interact with. You can check out the complete table of the supported AWS regions. Choose the region that is closest to you. Copy your preferred region from the Region column. In my case, I am using eu-west-1 (Ireland).

Create a new file, ~/.aws/config:

Add the following and replace the placeholder with the region you have copied:

```ini
[default]
region = YOUR_PREFERRED_REGION
```

Save your file.

You are now officially set up for the rest of the tutorial.

Next, you will see the different options Boto3 gives you to connect to S3 and other AWS services.

Client Versus Resource

At its core, all that Boto3 does is call AWS APIs on your behalf. For the majority of the AWS services, Boto3 offers two distinct ways of accessing these abstracted APIs:

- Client: low-level service access

- Resource: higher-level object-oriented service access

You can use either to interact with S3.

To connect to the low-level client interface, you must use Boto3's client(). You then pass in the name of the service you want to connect to, in this case, s3:

```python
import boto3
s3_client = boto3.client('s3')
```

To connect to the high-level interface, you'll follow a similar approach, but use resource():

```python
import boto3
s3_resource = boto3.resource('s3')
```

You've successfully connected to both versions, but now you might be wondering, "Which one should I use?"

With clients, there is more programmatic work to be done. The majority of the client operations give you a dictionary response. To get the exact information that you need, you'll have to parse that dictionary yourself. With resource methods, the SDK does that work for you.
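To make the difference concrete, here is a small sketch of the parsing that a client response requires. The sample_response dictionary is a trimmed, made-up stand-in shaped like the output of the client's list_buckets() call, so the snippet runs without touching AWS, and bucket_names() is a hypothetical helper.

```python
def bucket_names(list_buckets_response):
    """Pull the bucket names out of a list_buckets()-style dictionary."""
    # .get() with a default avoids a KeyError if 'Buckets' is missing.
    buckets = list_buckets_response.get('Buckets', [])
    return [bucket['Name'] for bucket in buckets]


# Illustrative data only: a real response carries more fields.
sample_response = {
    'Buckets': [
        {'Name': 'example-bucket-one'},
        {'Name': 'example-bucket-two'},
    ],
    'ResponseMetadata': {'HTTPStatusCode': 200},
}

print(bucket_names(sample_response))
# prints ['example-bucket-one', 'example-bucket-two']
```

With the resource, the equivalent is a loop over s3_resource.buckets.all() that reads each bucket's name attribute, with no dictionary handling on your side.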

With the client, you might see some slight performance improvements. The disadvantage is that your code becomes less readable than it would be if you were using the resource. Resources offer a better abstraction, and your code will be easier to comprehend.

Understanding how the client and the resource are generated is also important when you're considering which one to choose:

- Boto3 generates the client from a JSON service definition file. The client's methods support every single type of interaction with the target AWS service.

- Resources, on the other hand, are generated from JSON resource definition files.

Boto3 generates the client and the resource from different definitions. As a result, you may find cases in which an operation supported by the client isn't offered by the resource. Here's the interesting part: you don't need to change your code to use the client everywhere. For that operation, you can access the client directly via the resource like so: s3_resource.meta.client.

One such client operation is .generate_presigned_url(), which enables you to give your users access to an object within your bucket for a set period of time, without requiring them to have AWS credentials.
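As a rough sketch of how that could look, the helper below reaches the client through the resource and asks for a time-limited download link. The function name build_download_url(), the one-hour default, and the bucket and key in the commented call are assumptions made for illustration; generate_presigned_url() and its ClientMethod, Params, and ExpiresIn arguments come from the Boto3 client.

```python
def build_download_url(s3_resource, bucket_name, key, expires_in=3600):
    """Return a time-limited GET URL for one object.

    s3_resource is expected to be the object returned by
    boto3.resource('s3'); the client is reached through .meta.client.
    """
    return s3_resource.meta.client.generate_presigned_url(
        ClientMethod='get_object',
        Params={'Bucket': bucket_name, 'Key': key},
        ExpiresIn=expires_in,  # lifetime of the URL in seconds
    )


# Example call (hypothetical names, requires valid AWS credentials):
# import boto3
# url = build_download_url(boto3.resource('s3'), 'my-bucket', 'report.txt',
#                          expires_in=600)
```

Anyone holding the returned URL can fetch the object until it expires, so a short lifetime is usually the safer choice.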

Common Operations

Now that you know about the differences between clients and resources, let's start using them to build some new S3 components.

Creating a Bucket

To start off, you need an S3 bucket. To create one programmatically, you must first choose a name for your bucket. Remember that this name must be unique throughout the whole AWS platform, as bucket names are DNS compliant. If you try to create a bucket, but another user has already claimed your desired bucket name, your code will fail. Instead of success, you will see the following error: botocore.errorfactory.BucketAlreadyExists.

You can increase your chance of success when creating your bucket by picking a random name. You can write your own function that does that for you. In this implementation, you'll see how using the uuid module will help you achieve that. A UUID4's string representation is 36 characters long (including hyphens), and you can add a prefix to specify what each bucket is for.
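The length matters because S3 bucket names must be between 3 and 63 characters long. With 36 characters taken by the UUID, that leaves at most 27 for the prefix. Here is a minimal sketch of a guard for this, where max_prefix_length() and check_prefix() are hypothetical helpers and not part of Boto3:

```python
import uuid

MAX_BUCKET_NAME_LENGTH = 63
UUID4_STRING_LENGTH = len(str(uuid.uuid4()))  # 36, hyphens included


def max_prefix_length():
    """Longest prefix that still fits next to a UUID4 string."""
    return MAX_BUCKET_NAME_LENGTH - UUID4_STRING_LENGTH


def check_prefix(bucket_prefix):
    """Raise ValueError if prefix + UUID would exceed the name limit."""
    if len(bucket_prefix) > max_prefix_length():
        raise ValueError(
            f'prefix is {len(bucket_prefix)} characters long, '
            f'but at most {max_prefix_length()} fit'
        )
    return bucket_prefix


print(max_prefix_length())  # prints 27
```

This checks the length only. S3 has further naming rules, such as allowing only lowercase letters, which the sketch does not cover.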

Here's one way to generate such a bucket name:

```python
import uuid

def create_bucket_name(bucket_prefix):
    # The generated bucket name must be between 3 and 63 chars long
    return ''.join([bucket_prefix, str(uuid.uuid4())])
```

You've got your bucket name, but now there's one more thing you need to be aware of: unless your region is in the United States, you'll need to define the region explicitly when you are creating the bucket. Otherwise you will get an IllegalLocationConstraintException.

To illustrate what this means when you're creating your S3 bucket in a non-US region, take a look at the code below:

```python
s3_resource.create_bucket(Bucket=YOUR_BUCKET_NAME,
                          CreateBucketConfiguration={
                              'LocationConstraint': 'eu-west-1'})
```

You need to provide both a bucket name and a bucket configuration where you must specify the region, which in my case is eu-west-1.

This isn't ideal. Imagine that you want to take your code and deploy it to the cloud. Your task will become increasingly more difficult because you've now hardcoded the region. You could refactor the region and transform it into an environment variable, but then you'd have one more thing to manage.

Luckily, there is a better way to get the region programmatically, by taking advantage of a session object. Boto3 will create the session from your credentials. You just need to take the region and pass it to create_bucket() as its LocationConstraint configuration. Here's how to do that:

```python
def create_bucket(bucket_prefix, s3_connection):
    session = boto3.session.Session()
    current_region = session.region_name
    bucket_name = create_bucket_name(bucket_prefix)
    bucket_response = s3_connection.create_bucket(
        Bucket=bucket_name,
        CreateBucketConfiguration={
            'LocationConstraint': current_region})
    print(bucket_name, current_region)
    return bucket_name, bucket_response
```

The nice part is that this code works no matter where you want to deploy it: locally/EC2/Lambda. Moreover, you don't need to hardcode your region.

As both the client and the resource create buckets in the same way, you can pass either one as the s3_connection parameter.
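One caveat about create_bucket(): as far as I know, S3 rejects a LocationConstraint of us-east-1, so in that region the CreateBucketConfiguration argument has to be left out entirely. The sketch below builds the keyword arguments accordingly. create_bucket_kwargs() is a hypothetical helper, not a Boto3 function, and treating an unset region like us-east-1 is an assumption.

```python
def create_bucket_kwargs(bucket_name, region):
    """Build keyword arguments for create_bucket() for the given region."""
    kwargs = {'Bucket': bucket_name}
    # Assumption: us-east-1 must not be sent as a LocationConstraint,
    # and an unset region (None) is handled the same way here.
    if region and region != 'us-east-1':
        kwargs['CreateBucketConfiguration'] = {'LocationConstraint': region}
    return kwargs


print(create_bucket_kwargs('example-bucket', 'eu-west-1'))
# prints {'Bucket': 'example-bucket', 'CreateBucketConfiguration': {'LocationConstraint': 'eu-west-1'}}
print(create_bucket_kwargs('example-bucket', 'us-east-1'))
# prints {'Bucket': 'example-bucket'}
```

Inside create_bucket(), the call would then become s3_connection.create_bucket(**create_bucket_kwargs(bucket_name, current_region)).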

You'll now create two buckets. First create one using the client, which gives you back the bucket_response as a dictionary:

```pycon
>>> first_bucket_name, first_response = create_bucket(
...     bucket_prefix='firstpythonbucket',
...     s3_connection=s3_resource.meta.client)
firstpythonbucket7250e773-c4b1-422a-b51f-c45a52af9304 eu-west-1

>>> first_response
{'ResponseMetadata': {'RequestId': 'E1DCFE71EDE7C1EC', 'HostId': 'r3AP32NQk9dvbHSEPIbyYADT769VQEN/+xT2BPM6HCnuCb3Z/GhR2SBP+GM7IjcxbBN7SQ+k+9B=', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amz-id-2': 'r3AP32NQk9dvbHSEPIbyYADT769VQEN/+xT2BPM6HCnuCb3Z/GhR2SBP+GM7IjcxbBN7SQ+k+9B=', 'x-amz-request-id': 'E1DCFE71EDE7C1EC', 'date': 'Fri, 05 Oct 2018 15:00:00 GMT', 'location': 'http://firstpythonbucket7250e773-c4b1-422a-b51f-c45a52af9304.s3.amazonaws.com/', 'content-length': '0', 'server': 'AmazonS3'}, 'RetryAttempts': 0}, 'Location': 'http://firstpythonbucket7250e773-c4b1-422a-b51f-c45a52af9304.s3.amazonaws.com/'}
```

Then create a second bucket using the resource, which gives you back a Bucket instance as the bucket_response:

```pycon
>>> second_bucket_name, second_response = create_bucket(
...     bucket_prefix='secondpythonbucket', s3_connection=s3_resource)
secondpythonbucket2d5d99c5-ab96-4c30-b7f7-443a95f72644 eu-west-1

>>> second_response
s3.Bucket(name='secondpythonbucket2d5d99c5-ab96-4c30-b7f7-443a95f72644')
```

You've got your buckets. Next, you'll want to start adding some files to them.

Naming Your Files

You can name your objects by using standard file naming conventions. You can use any valid name. In this article, you'll look at a more specific case that helps you understand how S3 works under the hood.

If you're planning on hosting a large number of files in your S3 bucket, there's something you should keep in mind. If all your file names have a deterministic prefix that gets repeated for every file, such as a timestamp format like "YYYY-MM-DDThh:mm:ss", then you will soon find that you're running into performance issues when you're trying to interact with your bucket.

This will happen because S3 takes the prefix of the file and maps it onto a partition. The more files you add, the more will be assigned to the same partition, and that partition will be very heavy and less responsive.

What can you do to keep that from happening?

The easiest solution is to randomize the file name. You can imagine many different implementations, but in this case, you'll use the trusted uuid module to help with that. To make the file names easier to read for this tutorial, you'll be taking the first six characters of the generated number's hex representation and concatenating them with your base file name.

The helper function below allows you to pass in the number of bytes you want the file to have, the file name, and a sample content for the file to be repeated to make up the desired file size:

```python
def create_temp_file(size, file_name, file_content):
    random_file_name = ''.join([str(uuid.uuid4().hex[:6]), file_name])
    with open(random_file_name, 'w') as f:
        f.write(str(file_content) * size)
    return random_file_name
```

Create your first file, which you'll be using shortly:

```python
first_file_name = create_temp_file(300, 'firstfile.txt', 'f')
```

By adding randomness to your file names, you can efficiently distribute your data within your S3 bucket.

Creating Bucket and Object Instances

The next step after creating your file is to see how to integrate it into your S3 workflow.

This is where the resource's classes play an important role, as these abstractions make it easy to work with S3.

By using the resource, you have access to the high-level classes (Bucket and Object). This is how you can create one of each:

```python
first_bucket = s3_resource.Bucket(name=first_bucket_name)
first_object = s3_resource.Object(
    bucket_name=first_bucket_name, key=first_file_name)
```

The reason you have not seen any errors with creating the first_object variable is that Boto3 doesn't make calls to AWS to create the reference. The bucket_name and the key are called identifiers, and they are the necessary parameters to create an Object. Any other attribute of an Object, such as its size, is lazily loaded. This means that for Boto3 to get the requested attributes, it has to make calls to AWS.

Understanding Sub-resources

Bucket and Object are sub-resources of one another. Sub-resources are methods that create a new instance of a child resource. The parent's identifiers get passed to the child resource.

If you have a Bucket variable, you can create an Object directly:

```python
first_object_again = first_bucket.Object(first_file_name)
```

Or if you have an Object variable, then you can get the Bucket:

```python
first_bucket_again = first_object.Bucket()
```

Great, you now understand how to generate a Bucket and an Object. Next, you'll get to upload your newly generated file to S3 using these constructs.

Uploading a File

There are three ways you can upload a file:

- From an Object instance

- From a Bucket instance

- From the client

In each case, you have to provide the Filename, which is the path of the file you want to upload. You'll now explore the three alternatives. Feel free to pick whichever you like most to upload the first_file_name to S3.

Object Instance Version

You can upload using an Object instance:

```python
s3_resource.Object(first_bucket_name, first_file_name).upload_file(
    Filename=first_file_name)
```

Or you can use the first_object instance:

```python
first_object.upload_file(first_file_name)
```

Bucket Instance Version

Here's how you can upload using a Bucket instance:

```python
s3_resource.Bucket(first_bucket_name).upload_file(
    Filename=first_file_name, Key=first_file_name)
```

Client Version

You can also upload using the client:

```python
s3_resource.meta.client.upload_file(
    Filename=first_file_name, Bucket=first_bucket_name,
    Key=first_file_name)
```

You have successfully uploaded your file to S3 using one of the three available methods. In the upcoming sections, you'll mainly work with the Object class, as the operations are very similar between the client and the Bucket versions.

Downloading a File

To download a file from S3 locally, you'll follow similar steps as you did when uploading. But in this case, the Filename parameter will map to your desired local path. This time, it will download the file to the tmp directory:

```python
s3_resource.Object(first_bucket_name, first_file_name).download_file(
    f'/tmp/{first_file_name}')  # Python 3.6+
```

You've successfully downloaded your file from S3. Next, you'll see how to copy the same file between your S3 buckets using a single API call.

Copying an Object Between Buckets

If you need to copy files from one bucket to another, Boto3 offers you that possibility. In this example, you'll copy the file from the first bucket to the second, using .copy():

```python
def copy_to_bucket(bucket_from_name, bucket_to_name, file_name):
    copy_source = {
        'Bucket': bucket_from_name,
        'Key': file_name
    }
    s3_resource.Object(bucket_to_name, file_name).copy(copy_source)

copy_to_bucket(first_bucket_name, second_bucket_name, first_file_name)
```

Deleting an Object

Let's delete the new file from the second bucket by calling .delete() on the equivalent Object instance:

```python
s3_resource.Object(second_bucket_name, first_file_name).delete()
```

You've now seen how to use S3's core operations. You're ready to take your knowledge to the next level with more complex characteristics in the upcoming sections.

Advanced Configurations

In this section, you're going to explore more elaborate S3 features. You'll see examples of how to use them and the benefits they can bring to your applications.

ACL (Access Control Lists)

Access Control Lists (ACLs) help you manage access to your buckets and the objects within them. They are considered the legacy way of administrating permissions to S3. Why should you know about them? If you have to manage access to individual objects, then you would use an Object ACL.

By default, when you upload an object to S3, that object is private. If you want to make this object available to someone else, you can set the object's ACL to be public at creation time. Here's how you upload a new file to the bucket and make it accessible to everyone:

```python
second_file_name = create_temp_file(400, 'secondfile.txt', 's')
second_object = s3_resource.Object(first_bucket.name, second_file_name)
second_object.upload_file(second_file_name, ExtraArgs={
                          'ACL': 'public-read'})
```

You can get the ObjectAcl instance from the Object, as it is one of its sub-resource classes:

```python
second_object_acl = second_object.Acl()
```

To see who has access to your object, use the grants attribute:

```pycon
>>> second_object_acl.grants
[{'Grantee': {'DisplayName': 'name', 'ID': '24aafdc2053d49629733ff0141fc9fede3bf77c7669e4fa2a4a861dd5678f4b5', 'Type': 'CanonicalUser'}, 'Permission': 'FULL_CONTROL'}, {'Grantee': {'Type': 'Group', 'URI': 'http://acs.amazonaws.com/groups/global/AllUsers'}, 'Permission': 'READ'}]
```

You can make your object private again, without needing to re-upload it:

```pycon
>>> response = second_object_acl.put(ACL='private')
>>> second_object_acl.grants
[{'Grantee': {'DisplayName': 'name', 'ID': '24aafdc2053d49629733ff0141fc9fede3bf77c7669e4fa2a4a861dd5678f4b5', 'Type': 'CanonicalUser'}, 'Permission': 'FULL_CONTROL'}]
```

You have seen how you can use ACLs to manage access to individual objects. Next, you'll see how you can add an extra layer of security to your objects by using encryption.

Encryption

With S3, you can protect your data using encryption. You'll explore server-side encryption using the AES-256 algorithm where AWS manages both the encryption and the keys.

Create a new file and upload it using ServerSideEncryption:

```python
third_file_name = create_temp_file(300, 'thirdfile.txt', 't')
third_object = s3_resource.Object(first_bucket_name, third_file_name)
third_object.upload_file(third_file_name, ExtraArgs={
                         'ServerSideEncryption': 'AES256'})
```

You can check the algorithm that was used to encrypt the file, in this case AES256:

```pycon
>>> third_object.server_side_encryption
'AES256'
```

You now understand how to add an extra layer of protection to your objects using the AES-256 server-side encryption algorithm offered by AWS.

Storage

Every object that you add to your S3 bucket is associated with a storage class. All the available storage classes offer high durability. You choose how you want to store your objects based on your application's performance access requirements.

At present, you can use the following storage classes with S3:

- STANDARD: default for frequently accessed data

- STANDARD_IA: for infrequently used data that needs to be retrieved rapidly when requested

- ONEZONE_IA: for the same use case as STANDARD_IA, but stores the data in one Availability Zone instead of three

- REDUCED_REDUNDANCY: for frequently used noncritical data that is easily reproducible

If you want to change the storage class of an existing object, you need to recreate the object.

For example, reupload the third_object and set its storage class to STANDARD_IA:

```python
third_object.upload_file(third_file_name, ExtraArgs={
                         'ServerSideEncryption': 'AES256',
                         'StorageClass': 'STANDARD_IA'})
```

Reload the object, and you can see its new storage class:

```pycon
>>> third_object.reload()
>>> third_object.storage_class
'STANDARD_IA'
```

Versioning

You should use versioning to keep a complete record of your objects over time. It also acts as a protection mechanism against accidental deletion of your objects. When you request a versioned object, Boto3 will retrieve the latest version.

When you add a new version of an object, the storage that object takes in total is the sum of the size of its versions. So if you're storing an object of 1 GB, and you create 10 versions, then you have to pay for 10 GB of storage.
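To see that sum for your own objects, you could add up the sizes of every stored version. The sketch below assumes each version exposes a size attribute in bytes, which I believe is what the items of a Bucket's object_versions collection provide. total_version_bytes() is a hypothetical helper, and the stand-in objects exist only so the snippet runs without AWS.

```python
from types import SimpleNamespace


def total_version_bytes(object_versions):
    """Sum the sizes, in bytes, of an iterable of version-like objects."""
    # Assumption: entries without a size (for example delete markers)
    # should count as zero bytes.
    return sum(getattr(version, 'size', None) or 0
               for version in object_versions)


# Stand-ins for ten stored versions of a 1 GB object.
one_gb = 1024 ** 3
fake_versions = [SimpleNamespace(size=one_gb) for _ in range(10)]

print(total_version_bytes(fake_versions) / one_gb)  # prints 10.0
```

Against a real bucket, the argument could be something like first_bucket.object_versions.filter(Prefix=first_file_name).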

Enable versioning for the first bucket. To do this, you need to use the BucketVersioning class:

```python
def enable_bucket_versioning(bucket_name):
    bkt_versioning = s3_resource.BucketVersioning(bucket_name)
    bkt_versioning.enable()
    print(bkt_versioning.status)
```

```pycon
>>> enable_bucket_versioning(first_bucket_name)
Enabled
```

Then create two new versions for the first file Object, one with the contents of the original file and one with the contents of the third file:

```python
s3_resource.Object(first_bucket_name, first_file_name).upload_file(
   first_file_name)
s3_resource.Object(first_bucket_name, first_file_name).upload_file(
   third_file_name)
```

Now reupload the second file, which will create a new version:

```python
s3_resource.Object(first_bucket_name, second_file_name).upload_file(
    second_file_name)
```

You can retrieve the latest available version of your objects like so:

```pycon
>>> s3_resource.Object(first_bucket_name, first_file_name).version_id
'eQgH6IC1VGcn7eXZ_.ayqm6NdjjhOADv'
```

In this section, you've seen how to work with some of the most important S3 attributes and add them to your objects. Next, you'll see how to easily traverse your buckets and objects.

Traversals

If you need to retrieve information from or apply an operation to all your S3 resources, Boto3 gives you several ways to iteratively traverse your buckets and your objects. You'll start by traversing all your created buckets.

Bucket Traversal

To traverse all the buckets in your account, you can use the resource's buckets attribute alongside .all(), which gives you the complete list of Bucket instances:

```pycon
>>> for bucket in s3_resource.buckets.all():
...     print(bucket.name)
...
firstpythonbucket7250e773-c4b1-422a-b51f-c45a52af9304
secondpythonbucket2d5d99c5-ab96-4c30-b7f7-443a95f72644
```

You can use the client to retrieve the bucket information as well, but the code is more complex, as you need to extract it from the dictionary that the client returns:

```pycon
>>> for bucket_dict in s3_resource.meta.client.list_buckets().get('Buckets'):
...     print(bucket_dict['Name'])
...
firstpythonbucket7250e773-c4b1-422a-b51f-c45a52af9304
secondpythonbucket2d5d99c5-ab96-4c30-b7f7-443a95f72644
```

You have seen how to iterate through the buckets you have in your account. In the upcoming section, you'll pick one of your buckets and iteratively view the objects it contains.

Object Traversal

If you want to list all the objects from a bucket, the following code will generate an iterator for you:

```pycon
>>> for obj in first_bucket.objects.all():
...     print(obj.key)
...
127367firstfile.txt
616abesecondfile.txt
fb937cthirdfile.txt
```

The obj variable is an ObjectSummary. This is a lightweight representation of an Object. The summary version doesn't support all of the attributes that the Object has. If you need to access them, use the Object() sub-resource to create a new reference to the underlying stored key. Then you'll be able to extract the missing attributes:

```pycon
>>> for obj in first_bucket.objects.all():
...     subsrc = obj.Object()
...     print(obj.key, obj.storage_class, obj.last_modified,
...           subsrc.version_id, subsrc.metadata)
...
127367firstfile.txt STANDARD 2018-10-05 15:09:46+00:00 eQgH6IC1VGcn7eXZ_.ayqm6NdjjhOADv {}
616abesecondfile.txt STANDARD 2018-10-05 15:09:47+00:00 WIaExRLmoksJzLhN7jU5YzoJxYSu6Ey6 {}
fb937cthirdfile.txt STANDARD_IA 2018-10-05 15:09:05+00:00 null {}
```

You can now iteratively perform operations on your buckets and objects. You're almost done. There's one more thing you should know at this stage: how to delete all the resources you've created in this tutorial.

Deleting Buckets and Objects

To remove all the buckets and objects you have created, you must first make sure that your buckets have no objects within them.

Deleting a Non-empty Bucket

To be able to delete a bucket, you must first delete every single object within the bucket, or else the BucketNotEmpty exception will be raised. When you have a versioned bucket, you need to delete every object and all its versions.

If you find that a LifeCycle rule that will do this automatically for you isn't suitable to your needs, here's how you can programmatically delete the objects:

```python
def delete_all_objects(bucket_name):
    res = []
    bucket = s3_resource.Bucket(bucket_name)
    for obj_version in bucket.object_versions.all():
        res.append({'Key': obj_version.object_key,
                    'VersionId': obj_version.id})
    print(res)
    bucket.delete_objects(Delete={'Objects': res})
```

The above code works whether or not you have enabled versioning on your bucket. If you haven't, the version of the objects will be null. You can batch up to 1000 deletions in one API call, using .delete_objects() on your Bucket instance, which is more cost-effective than individually deleting each object.
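Because one .delete_objects() call takes at most 1000 entries, a bucket holding more object versions than that needs its list split into batches first. Here is a minimal sketch of that splitting, where chunked() and delete_in_batches() are hypothetical helpers and bucket is expected to be a Bucket instance like the one used in delete_all_objects():

```python
def chunked(items, size=1000):
    """Yield consecutive slices of items, each with at most size entries."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def delete_in_batches(bucket, object_identifiers, batch_size=1000):
    """Call bucket.delete_objects() once per batch; return the call count."""
    calls = 0
    for batch in chunked(object_identifiers, batch_size):
        bucket.delete_objects(Delete={'Objects': batch})
        calls += 1
    return calls


# Batch sizes for 2500 entries, computed without contacting AWS.
print([len(batch) for batch in chunked(list(range(2500)))])
# prints [1000, 1000, 500]
```

A side effect of looping over batches is that an empty list results in no API call at all.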

Run the new function against the first bucket to remove all the versioned objects:

```pycon
>>> delete_all_objects(first_bucket_name)
[{'Key': '127367firstfile.txt', 'VersionId': 'eQgH6IC1VGcn7eXZ_.ayqm6NdjjhOADv'}, {'Key': '127367firstfile.txt', 'VersionId': 'UnQTaps14o3c1xdzh09Cyqg_hq4SjB53'}, {'Key': '127367firstfile.txt', 'VersionId': 'null'}, {'Key': '616abesecondfile.txt', 'VersionId': 'WIaExRLmoksJzLhN7jU5YzoJxYSu6Ey6'}, {'Key': '616abesecondfile.txt', 'VersionId': 'null'}, {'Key': 'fb937cthirdfile.txt', 'VersionId': 'null'}]
```

As a final test, you can upload a file to the second bucket. This bucket doesn't have versioning enabled, and thus the version will be null. Apply the same function to remove the contents:

```pycon
>>> s3_resource.Object(second_bucket_name, first_file_name).upload_file(
...     first_file_name)
>>> delete_all_objects(second_bucket_name)
[{'Key': '9c8b44firstfile.txt', 'VersionId': 'null'}]
```

You've successfully removed all the objects from both your buckets. You're now ready to delete the buckets.

Deleting Buckets

To finish off, you'll use .delete() on your Bucket instance to remove the first bucket:

```python
s3_resource.Bucket(first_bucket_name).delete()
```

If you want, you can use the client version to remove the second bucket:

```python
s3_resource.meta.client.delete_bucket(Bucket=second_bucket_name)
```

Both the operations were successful because you emptied each bucket before attempting to delete it.

You've now run some of the most important operations that you can perform with S3 and Boto3. Congratulations on making it this far! As a bonus, let's explore some of the advantages of managing S3 resources with Infrastructure as Code.

Python Code or Infrastructure as Code (IaC)?

As you've seen, most of the interactions you've had with S3 in this tutorial had to do with objects. You didn't see many bucket-related operations, such as adding policies to the bucket, adding a LifeCycle rule to transition your objects through the storage classes, archive them to Glacier or delete them altogether, or enforcing that all objects be encrypted by configuring Bucket Encryption.

Manually managing the state of your buckets via Boto3's clients or resources becomes increasingly difficult as your application starts adding other services and grows more complex. To monitor your infrastructure in concert with Boto3, consider using an Infrastructure as Code (IaC) tool such as CloudFormation or Terraform to manage your application's infrastructure. Either one of these tools will maintain the state of your infrastructure and inform you of the changes that you've applied.

If you decide to go down this route, keep the following in mind:

- Any bucket-related operation that modifies the bucket in any way should be done via IaC.

- If you want all your objects to act in the same way (all encrypted, or all public, for example), usually there is a way to do this directly using IaC, by adding a Bucket Policy or a specific Bucket property.

- Bucket read operations, such as iterating through the contents of a bucket, should be done using Boto3.

- Object-related operations at an individual object level should be done using Boto3.

Conclusion

Congratulations on making it to the end of this tutorial!

You're now equipped to start working programmatically with S3. You now know how to create objects, upload them to S3, download their contents and change their attributes directly from your script, all while avoiding common pitfalls with Boto3.

May this tutorial be a stepping stone in your journey to building something great using AWS!

Further Reading

If you want to learn more, check out the following:

- Boto3 documentation

- Generating Random Data in Python (Guide)

- IAM Policies and Bucket Policies and ACLs

- Object Tagging

- LifeCycle Configurations

- Cross-Region Replication

- CloudFormation

- Terraform


Source: https://realpython.com/python-boto3-aws-s3/
